
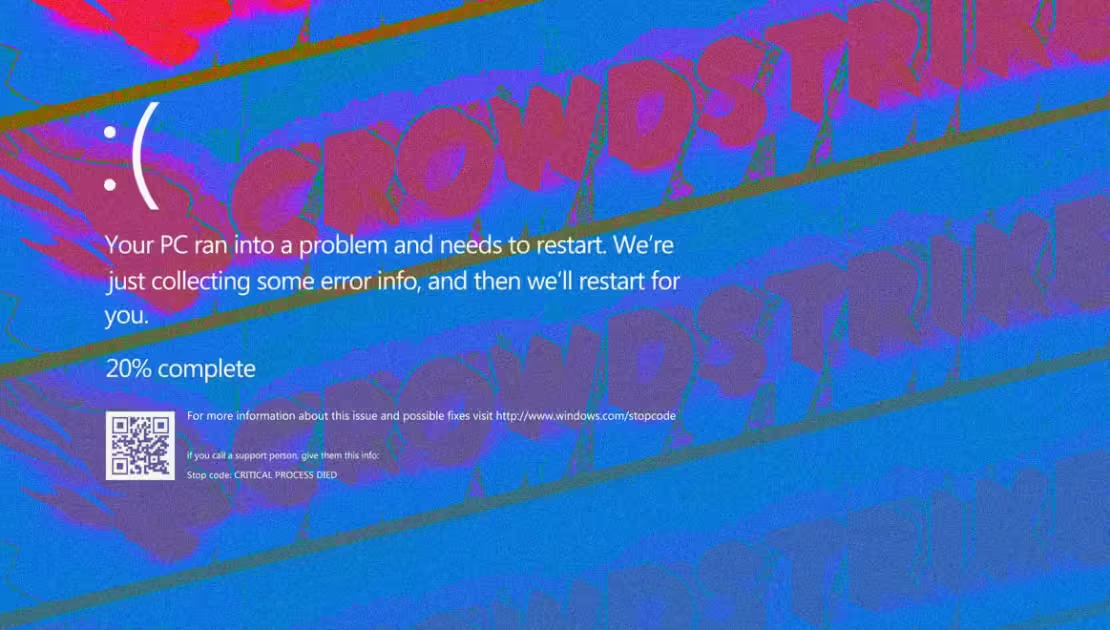

The CrowdStrike butterfly effect

July 24, 2024

Posted by: Evans Asare

The CrowdStrike butterfly effect: cyber pros weigh in on the far-reaching disaster. A few hours without flights, medical procedures, or financial transactions. A day or two without less critical services. And a week or two of work restoring every affected system. Yet cybersecurity experts believe the ripples caused by CrowdStrike’s calamitous software update will be felt far beyond these short-term disruptions.

Cybernews received dozens of insights from leading cybersecurity professionals worldwide. Here are 20 takeaways we think are worth sharing; together, they paint a sobering picture of our digital dependencies.

On the incident scale

1. “This outage has hit almost everyone. Taxpayer-funded services like airports, hospitals, and schools have been heavily impacted around the world. A huge amount of business has been lost to downtime. The amount of money this outage will cost is mind-boggling. We do not know the full extent of the impacts just yet, but this is probably the most costly IT outage in history,” said Alexander Linton, director at Session, the end-to-end encrypted messenger.

2. “That was kind of an apocalypse. That is why there are no ready-made recovery procedures or a fail-safe mechanism,” said Victor Zyamzin, chief business officer at Qrator Labs.

3. “One way to view this is like a large-scale ransomware attack. I’ve talked to several CISOs and CSOs who are considering triggering restore-from-backup protocols instead of manually booting each computer into safe mode, finding the offending CrowdStrike file, deleting it, and rebooting into normal Windows. Companies that haven’t invested in rapid backup solutions are stuck in a catch-22,” said Eric O’Neill, cybersecurity expert, former FBI Counterterrorism & Counterintelligence operative, attorney, and founder of The Georgetown Group and Nexasure AI.

On the root causes

4. “Single points of failure deeply permeate our current internet infrastructure, and hospitals, companies, and the traditional financial system sit on top of a house of cards that can easily collapse,” said Yannik Schrade, CEO and Co-founder of Arcium. “The biggest lesson for us from this global outage is not to trust, but to verify. Trust should not be part of the equation with systems this important for healthcare, finance, and infrastructure.”

5. “Unfortunately, it won’t get better. More and more systems are depending on just a few vendors because of the immense consolidation in the cybersecurity market,” said David Brumley, CEO of ForAllSecure and cybersecurity professor at Carnegie Mellon University. “Redundancy is getting harder and harder. Two systems running the same software will both crash together. Instead, we need diversity. That goes against the industry, where there is massive consolidation among vendors. Google buys Wiz, Cisco buys Duo, and so on with all the other unicorns, which means that our software reliability stacks are in the hands of just a few meta companies.”

6. “Having a single point of failure in your system means that, eventually, there will probably be a failure,” Alexander Linton said. “Having so much critical infrastructure relying on a centralized service is a huge mistake and something we should try to remedy as an industry going forward.”

Update cycles taken out of the hands of sysadmins

7. “Many security teams don’t realize that their endpoint protection platforms’ signature updates often themselves contain code, further exacerbating the issue. We should expect to see changes in this operating model. For better or worse, CrowdStrike has just shown why this operating model of pushing updates without IT intervention is unsustainable,” said Jake Williams, former NSA hacker, faculty at IANS Research, and VP of R&D at Hunter Strategy.

Who’s to blame?

8. “Ultimately, it’s the vendor (CrowdStrike) that pushed the changes which broke things, not the end-user organizations or businesses themselves. Critical infrastructure might have an EDR or XDR solution slapped onto it just to ‘check the box,’ but the scenario where the provider accidentally breaks the infrastructure isn’t one you ever really think of,” said John Hammond, principal security researcher at Huntress. “With all that root-level power and capability, it is an especially fringe scenario. A small mistake in code, any accidental misconfiguration, or just simply unexpected behavior can cause the whole computer to crash.”

9. “When you buy a car, it’s been thoroughly tested for safety. CrowdStrike didn’t do enough testing on their software, which resulted in a broken update. That was paired with their worldwide distribution, immediately crashing computers across the internet,” said David Brumley. “CrowdStrike needs to make a radical investment in improving their software testing and get better about incrementally rolling out updates so not everything breaks at once. Organizations need to put pressure on their vendors.”


10. “Accountability likely rests with CrowdStrike for the faulty deployment. However, organizations also need to take responsibility for having adequate backup and recovery procedures. Both parties play a role in ensuring system reliability and resilience,” Matthew Carr, Co-founder & CTO at Atumcell Group, said.

11. “The world was on notice after the SolarWinds attack, where Russian cyberspies infiltrated the patch update process to send a Trojan update to SolarWinds customers. Following that attack, a Russian cybercrime syndicate deployed a similar attack against Kaseya’s customers. Every company should have learned the lesson about controlling updates, especially CrowdStrike, which was called in to solve both the SolarWinds and Kaseya cyberattacks,” said Eric O’Neill.

12. “I hope this doesn’t undermine confidence in cloud-based security solutions. As cybercrime and espionage become more sophisticated and leverage top-tier AI for attacks, rapid deployment of intelligence from the cloud is the only effective response. Consumers have two options: rely on cloud-based technologies or air-gap their systems and dust off their old typewriters,” O’Neill added.

The lackluster response

13. “Businesses have disaster recovery plans, which, unfortunately, have remained more of a paper-based exercise than a plan that was tried and tested at scale across the key simulation scenarios,” said Alina Timofeeva, strategic advisor in technology. “It is key for companies to invest in operational resilience, which is broader than just technology. It covers technology, data, third parties, processes, and people.”

14. “Companies that keep their infrastructure in the cloud coped with the problem quicker than others thanks to virtualization and API-based scripts. For AWS-hosted and Microsoft Azure-hosted virtual machines, the instructions are usually published in a matter of hours. Moreover, implementing those instructions takes much less time than doing the same for a full fleet of bare-metal servers,” said Victor Zyamzin. “Companies that back up regularly were probably also less impacted.”

On potential changes

15. “Computer scientists have long known what to do here: you build in thorough, automated stress testing for every software change. We call this fuzzing. You pair that with incremental updates so that if something slips through the cracks, you can detect it early without the entire internet going down. Sadly, improving software security testing is the first thing companies skimp on. New features, or worse, cost savings, mean we’ve globally underinvested in software reliability,” said David Brumley.
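To make Brumley’s point about fuzzing concrete, here is a minimal sketch in Python (3.9+) of plain random fuzzing. The file format and the names `parse_rule_file`, `parse_rule_file_unchecked`, and `fuzz` are hypothetical, invented for illustration; this is not CrowdStrike’s content format or any vendor’s test harness. Production fuzzers such as libFuzzer or AFL++ are coverage-guided and far more effective than the blind random inputs used here.

```python
import random


def parse_rule_file(data: bytes) -> list[bytes]:
    """Parse a hypothetical toy update format, rejecting malformed input.

    Assumed layout: byte 0 holds the field count N; each field is a
    1-byte length followed by that many bytes of payload.
    """
    if not data:
        raise ValueError("empty file")
    fields, pos = [], 1
    for _ in range(data[0]):
        if pos >= len(data):
            raise ValueError("truncated before a length byte")
        length = data[pos]
        pos += 1
        if pos + length > len(data):
            raise ValueError("field runs past end of file")
        fields.append(data[pos:pos + length])
        pos += length
    return fields


def parse_rule_file_unchecked(data: bytes) -> list[bytes]:
    """Same toy format, but trusts the header instead of checking bounds."""
    fields, pos = [], 1
    for _ in range(data[0]):      # IndexError on empty input
        length = data[pos]        # IndexError if the file is truncated
        fields.append(data[pos + 1:pos + 1 + length])
        pos += 1 + length
    return fields


def fuzz(parser, iterations: int = 2000, seed: int = 0) -> list[bytes]:
    """Feed random byte strings to `parser` and collect inputs that crash it.

    A ValueError counts as a graceful rejection; any other exception is
    recorded as a crash worth investigating.
    """
    rng = random.Random(seed)
    crashes = []
    for _ in range(iterations):
        data = rng.randbytes(rng.randint(0, 64))
        try:
            parser(data)
        except ValueError:
            pass  # malformed input was rejected cleanly
        except Exception:
            crashes.append(data)
    return crashes


print(len(fuzz(parse_rule_file)))                # expected: 0
print(len(fuzz(parse_rule_file_unchecked)) > 0)  # expected: True
```

The checked parser rejects every malformed input with a `ValueError`, while the unchecked one, which trusts the length fields inside the file, crashes with `IndexError` on some of the random inputs. That class of bug (reading past the end of data the code assumed was well-formed) is the kind of thing an automated fuzzing step in a release pipeline is meant to catch before an update ships.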

16. “I would particularly encourage companies to look much deeper into their current reliance on cloud providers and mitigate the concentration risk, both internally within the company and across the world, to minimize the impact on material services should a major IT disaster occur,” said Alina Timofeeva.

17. “The only one that comes to mind is deploying future CrowdStrike updates incrementally, meaning that the next updates could be rolled out to the first 1% of devices in the company, then to 5% a few days later, then we wait a week, and so on,” shared Victor Zyamzin. “And we recommend deploying this way not only updates from CrowdStrike but from all vendors that have an impact on your infrastructure.”
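Zyamzin’s staged schedule needs only a small amount of logic on the deployment side. The sketch below, in Python (3.9+), assumes a hypothetical fleet identified by device IDs and hypothetical stage timings (1% on day 0, 5% on day 3, 25% on day 10, everyone on day 17) loosely modeled on his quote; `ROLLOUT_STAGES`, `device_bucket`, `rollout_percentage`, and `should_install` are illustrative names, not any vendor’s API. Hashing the device ID together with the update ID gives each device a stable position in the rollout, so widening a stage only adds devices and never reshuffles the ones already updated.

```python
import hashlib

# Hypothetical schedule: (share of the fleet in %, day the stage opens).
# The day offsets are illustrative assumptions, not a vendor's policy.
ROLLOUT_STAGES = [
    (1, 0),     # canary ring: 1% of devices on release day
    (5, 3),     # 5% a few days later
    (25, 10),   # wait a week before widening further
    (100, 17),  # everyone else
]


def device_bucket(device_id: str, update_id: str) -> int:
    """Map a device to a stable bucket in [0, 100) for one update.

    Mixing in the update ID means a different 1% of the fleet acts as
    the canary for each update.
    """
    digest = hashlib.sha256(f"{update_id}:{device_id}".encode()).digest()
    return int.from_bytes(digest[:8], "big") % 100


def rollout_percentage(days_since_release: int, halted: bool = False) -> int:
    """Share of the fleet allowed to install the update right now."""
    if halted:  # health signal from earlier rings looked bad: stop widening
        return 0
    allowed = 0
    for percent, start_day in ROLLOUT_STAGES:
        if days_since_release >= start_day:
            allowed = percent
    return allowed


def should_install(device_id: str, update_id: str,
                   days_since_release: int, halted: bool = False) -> bool:
    bucket = device_bucket(device_id, update_id)
    return bucket < rollout_percentage(days_since_release, halted)


fleet = [f"host-{i}" for i in range(10_000)]
for day in (0, 3, 10, 17):
    eligible = sum(should_install(d, "update-42", day) for d in fleet)
    print(f"day {day}: {eligible} of {len(fleet)} devices eligible")
```

The `halted` flag stands in for whatever health signal an organization trusts (crash reports, devices that stop checking in); if the 1% canary ring starts failing, widening stops before the remaining 99% of the fleet is touched.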


18. “The concept of EDR, which relies on frequent policy updates based on continuously discovered new attack patterns, is fundamentally flawed: It requires frequent software updates, which may contain bugs that risk the system’s business continuity,” David Barzilai, co-founder of Karamba Security, shared. “Mission-critical applications that run as closed systems (such as airport and hospital servers, vehicle systems, medical devices, printers, etc.) should be hardened to ensure they only run authorized programs and deterministically prevent any foreign code from executing. This approach eliminates the need for frequent policy updates and proactively blocks any attempts to run foreign code, such as malware.”
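Barzilai’s idea of letting a closed system run only authorized programs is commonly called application allowlisting. The following is a minimal user-space sketch in Python (3.9+), assuming a hypothetical allowlist of SHA-256 hashes of approved binaries; the helper names are illustrative and this is not Karamba Security’s product. Real enforcement (for example Windows Defender Application Control or Linux fapolicyd) happens in the operating system at load time; a user-space check like this one has a gap between hashing a file and executing it, and is only meant to show the default-deny decision.

```python
import hashlib
import subprocess
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large binaries are not read into memory at once."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_allowlist(approved_paths) -> frozenset[str]:
    """Record the hashes of binaries an administrator has explicitly approved."""
    return frozenset(sha256_of(Path(p)) for p in approved_paths)


def is_authorized(path, allowlist: frozenset[str]) -> bool:
    """Default deny: unknown, missing, or modified files are all rejected."""
    p = Path(path)
    return p.is_file() and sha256_of(p) in allowlist


def run_if_authorized(argv: list[str], allowlist: frozenset[str]):
    """Launch argv[0] only if its current contents match an approved hash."""
    if not is_authorized(argv[0], allowlist):
        raise PermissionError(f"blocked: {argv[0]} is not on the allowlist")
    return subprocess.run(argv, check=True)
```

Because the allowlist pins exact file hashes, any change to an approved binary, malicious or not, makes it unauthorized until someone deliberately approves the new hash. That is the trade-off the quote describes: no stream of detection-policy updates, but every legitimate software change has to be explicitly approved.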

19. “Replacing an EDR or XDR vendor could take quarters, if not years. Plus, you may change vendors, but who could guarantee that the new partner would not fail too?” said Victor Zyamzin. “This situation would push more companies to switch from the endpoint security approach to the zero trust security approach. They might, in fact, transition to actual cloud-based security solutions, meaning they will put less trust in the endpoints and more in cloud security. Therefore, they would need cloud-based security solutions. If 20% of companies did that, it would be a fantastic win for our industry. But I believe only 5-15% would actually go for that.”

On regulation

20. “I think the more reasonable question would be: what could regulators not do? The thing is that every company develops a risk model. It helps them choose what kind of protection to install and invest in. They are satisfied with their protection. But then the regulator comes and says: ‘We did some research and discovered that only 60 percent of companies use antivirus. Therefore, we decided that installation of antivirus will be mandatory from now on.’

“They do not check if all businesses actually need that antivirus. As a result, companies are forced to buy CrowdStrike or other solutions, chosen because they are cheaper, just to satisfy the regulators. I believe that maybe 50% to 90% of the companies affected today wouldn’t have been affected at all if they had not installed CrowdStrike or other EDR and XDR software products in the first place just for compliance reasons,” said Victor Zyamzin.


sublingual cialis tadalafil liquid fda approval date and tadalafil vidalista cialis free trial offer

pregnancy category for tadalafil take cialis the correct way or buy generic cialis

http://burgman-club.ru/forum/away.php?s=https://tadalaccess.com side effects of cialis daily

tadalafil softsules tuf 20 cialis with dapoxetine and what is cialis prescribed for whats cialis

bph treatment cialis: cialis cost per pill – best place to buy tadalafil online

what is the use of tadalafil tablets: TadalAccess – is there a generic cialis available

https://tadalaccess.com/# cialis dopoxetine

reddit cialis how long does it take for cialis to start working cialis 20mg tablets

buying cheap cialis online: Tadal Access – cialis definition

https://tadalaccess.com/# cialis precio

when will teva’s generic tadalafil be available in pharmacies TadalAccess cialis for daily use cost

cialis tadalafil 5mg once a day buy tadalafil online no prescription or tadalafil (tadalis-ajanta) reviews

https://maps.google.co.ao/url?sa=t&url=https://tadalaccess.com cheap generic cialis canada

cheap cialis pills uk what is the difference between cialis and tadalafil and cialis vs flomax cialis com coupons

buying cialis: cialis generic cvs – cheap tadalafil no prescription

cialis and adderall cialis insurance coverage or cialis male enhancement

https://toolbarqueries.google.ge/url?q=http://tadalaccess.com cialis buy

tadalafil 10mg side effects cialis 100mg from china and cialis dapoxetine overnight shipment cialis buy online

cialis recommended dosage: TadalAccess – cialis before and after pictures

https://tadalaccess.com/# comprar tadalafil 40 mg en walmart sin receta houston texas

cialis canadian pharmacy Tadal Access cialis available in walgreens over counter??

bph treatment cialis: Tadal Access – tadalafil prescribing information

https://tadalaccess.com/# canadian pharmacy cialis 20mg

online cialis prescription: cialis generic purchase – cialis purchase

cialis tadalafil 5mg once a day cialis 20mg tablets cialis 10mg price

cialis price: levitra vs cialis – cialis before and after

cialis 20 mg cialis online canada or cialis in canada

http://rusnor.org/bitrix/redirect.php?event1=&event2=&event3=&goto=https://tadalaccess.com/ canada cialis generic

cialis website pastilla cialis and whats cialis san antonio cialis doctor

cialis 5 mg tablet cialis where can i buy or poppers and cialis

https://clients1.google.com.sl/url?q=https://tadalaccess.com cialis online without a prescription

is generic tadalafil as good as cialis order cialis from canada and average dose of tadalafil cialis when to take

https://tadalaccess.com/# mail order cialis

tadalafil citrate liquid: Tadal Access – cialis 20 mg price walmart

how to buy cialis how long i have to wait to take tadalafil after antifugal or achats produit tadalafil pour femme en ligne

https://www.miss-sc.org/Redirect.aspx?destination=http://tadalaccess.com/ buy cialis tadalafil

cialis tablets for sell cialis super active vs regular cialis and cheap cialis cialis dapoxetine australia

order cialis from canada Tadal Access cialis overnight deleivery

cialis vs tadalafil: tadalafil troche reviews – cialis drug

https://tadalaccess.com/# cialis using paypal in australia

how to get cialis without doctor: cialis generic cost – cheap canadian cialis

sildalis sildenafil tadalafil: buy cialis overnight shipping – e20 pill cialis

cialis for daily use reviews TadalAccess cialis price

https://tadalaccess.com/# cialis 100mg review

cialis price cialis price walmart or when will generic tadalafil be available

https://www.google.tn/url?q=https://tadalaccess.com buy tadalafil powder

cialis tadalafil 20mg price buy cialis tadalafil and how well does cialis work cialis not working first time

cialis otc switch what possible side effect should a patient taking tadalafil report to a physician quizlet or cialis com free sample

http://www.sprang.net/url?q=https://tadalaccess.com cialis 20 mg best price

can i take two 5mg cialis at once cialis tubs and does tadalafil work cialis canada price

what is the cost of cialis: cialis generic 20 mg 30 pills – purchase generic cialis

cialis genetic: Tadal Access – cialis instructions

when is the best time to take cialis how many 5mg cialis can i take at once or safest and most reliable pharmacy to buy cialis

http://go.iranscript.ir/index.php?url=https://tadalaccess.com cialis generic 20 mg 30 pills

walgreen cialis price cialis 20mg side effects and cialis for bph cialis india

canadian pharmacy generic cialis TadalAccess tadalafil dapoxetine tablets india

https://tadalaccess.com/# cialis for bph

cialis by mail: sildenafil vs tadalafil which is better – cialis pharmacy

non prescription cialis: TadalAccess – cialis strength

cialis indications cialis 5mg price walmart tadalafil without a doctor prescription

https://tadalaccess.com/# cialis pricing

mambo 36 tadalafil 20 mg reviews cheap t jet 60 cialis online or cialis sublingual

https://cse.google.com.jm/url?sa=t&url=https://tadalaccess.com generic cialis

printable cialis coupon cialis price canada and order cialis online cheap generic is there a generic cialis available

how long does cialis stay in your system: great white peptides tadalafil – cheapest cialis

does medicare cover cialis for bph: Tadal Access – canadian no prescription pharmacy cialis

cialis 5mg price walmart cialis erection or cialis com coupons

http://www.linkestan.com/frame-click.asp?url=http://tadalaccess.com/ cialis drug

cialis paypal canada what is the difference between cialis and tadalafil? and cialis black 800 mg pill house cialis side effects a wife’s perspective

cialis patent expiration TadalAccess sildenafil vs tadalafil vs vardenafil

https://tadalaccess.com/# how to buy cialis

buy cialis from canada: TadalAccess – cialis bodybuilding

achats produit tadalafil pour femme en ligne: TadalAccess – cialis pills online

cialis tadalafil 20 mg cialis information buy tadalafil cheap online

https://tadalaccess.com/# prescription free cialis

cialis super active real online store: cialis buy australia online – tadalafil liquid fda approval date

recreational cialis: online cialis – cialis tadalafil 10 mg

tadalafil generico farmacias del ahorro cialis commercial bathtub or cialis generic timeline 2018

http://www.google.td/url?q=https://tadalaccess.com cialis no perscrtion

tadalafil dose for erectile dysfunction how long does it take cialis to start working and letairis and tadalafil tadalafil 5 mg tablet

tadalafil without a doctor prescription how long does cialis take to work buy cialis pro

pictures of cialis pills cialis wikipedia or buy voucher for cialis daily online

https://cse.google.com.ng/url?sa=t&url=https://tadalaccess.com cialis coupon walgreens

when is generic cialis available cialis price walmart and tadalafil generico farmacias del ahorro order cialis online cheap generic

https://tadalaccess.com/# cialis free 30 day trial

how well does cialis work: TadalAccess – cialis 10mg price

cialis canada over the counter cialis online pharmacy australia cialis com free sample

canadian online pharmacy cialis: how to get cialis without doctor – cialis definition

buy liquid tadalafil online: tadalafil long term usage – how long does cialis take to work 10mg

https://tadalaccess.com/# difference between tadalafil and sildenafil

buy cialis without prescription is generic tadalafil as good as cialis or cialis from mexico

https://cse.google.dk/url?q=https://tadalaccess.com cialis 20 mg from united kingdom

tadalafil 20mg buy cialis 20mg and cialis free trial voucher why does tadalafil say do not cut pile

online cialis prescription most recommended online pharmacies cialis tadalafil soft tabs

cialis side effects forum: TadalAccess – cialis no prescription overnight delivery

cialis online canada cialis samples or cialis 80 mg dosage

http://images.google.gm/url?q=https://tadalaccess.com cialis by mail

tadalafil canada is it safe tadalafil medication and cialis no perscrtion buy tadalafil powder

cialis drug interactions buying cialis in canada or <a href=" http://k71.saaa.co.th/phpinfo.php?a=side+effects+of+sildenafil “>buy cheap tadalafil online

https://images.google.com.ai/url?sa=t&url=https://tadalaccess.com paypal cialis no prescription

cialis manufacturer does cialis lower your blood pressure and maximum dose of cialis in 24 hours cialis doesnt work for me

https://tadalaccess.com/# cialis para que sirve

buy cialis canadian cialis super active vs regular cialis where can i get cialis

cheap cialis 5mg: cialis commercial bathtub – mambo 36 tadalafil 20 mg reviews

cialis buy online canada buy tadalafil online no prescription or when does cialis go generic

https://clipperfund.com/?URL=https://tadalaccess.com:: cialis dapoxetine overnight shipment

order cialis from canada cialis canada over the counter and tadalafil cheapest online cialis for bph

how long does it take for cialis to take effect: TadalAccess – cialis erection

https://tadalaccess.com/# cialis 5mg daily how long before it works

cialis 5mg daily Tadal Access cialis price cvs

where to get generic cialis without prescription: buy cialis pro – purchase brand cialis

sanofi cialis otc Tadal Access cialis 2.5 mg

side effects cialis tadalafil and sildenafil taken together or cialis 5mg cost per pill

http://www.mozakin.com/bbs-link.php?tno=&url=tadalaccess.com/ cialis images

cialis manufacturer cheapest cialis 20 mg and best place to buy tadalafil online cialis patent expiration date

https://tadalaccess.com/# erectile dysfunction tadalafil

where to buy cialis soft tabs: TadalAccess – best price for tadalafil

cialis how to use cialis tadalafil 10 mg cialis stories

https://tadalaccess.com/# cialis manufacturer coupon

cialis street price: TadalAccess – cialis pill

tadalafil generic reviews tadalafil generic headache nausea cialis 10mg price

cialis at canadian pharmacy: Tadal Access – cialis black in australia

ambrisentan and tadalafil combination brands tadalafil best price 20 mg or ordering tadalafil online

http://dndetails.com/whois/show.php?ddomain=tadalaccess.com paypal cialis payment

online pharmacy cialis cialis from canadian pharmacy registerd and cialis 20mg review cialis covered by insurance

https://tadalaccess.com/# cialis sample request form

price of cialis in pakistan cialis not working cialis dosage reddit

cialis generic timeline 2018 what is the cost of cialis or how to get cialis prescription online

https://smccd.edu/disclaimer/redirect.php?url=https://tadalaccess.com/ cialis results

cialis alternative cialis para que sirve and buy generic cialis 5mg buy cialis united states

cialis once a day tadalafil 5 mg tablet or over the counter cialis walgreens

https://maps.google.dj/url?sa=t&url=https://tadalaccess.com cialis is for daily use

purchase cialis online cialis no prescription and cialis samples cheap cialis 20mg

cialis alternative: TadalAccess – is there a generic cialis available?

https://tadalaccess.com/# purchasing cialis

cialis vs flomax for bph tadalafil 20mg (generic equivalent to cialis) cialis genetic

paypal cialis no prescription: buy cialis toronto – canadian pharmacy cialis 20mg

buy cialis usa cialis lower blood pressure tadalafil review forum

cialis bodybuilding cialis before and after photos or what does cialis look like

https://clients1.google.com.eg/url?q=https://tadalaccess.com cialis side effects

buy cialis online canada tadalafil and ambrisentan newjm 2015 and cialis para que sirve vardenafil and tadalafil

https://tadalaccess.com/# walmart cialis price

cialis active ingredient cialis drug or how much does cialis cost with insurance

https://images.google.mu/url?sa=t&url=https://tadalaccess.com buying generic cialis online safe

buying generic cialis vardenafil vs tadalafil and oryginal cialis cialis review

how many 5mg cialis can i take at once Tadal Access what is the difference between cialis and tadalafil?

how long i have to wait to take tadalafil after antifugal: cialis generic timeline 2018 – when will cialis become generic

antibiotic without presription: BiotPharm – cheapest antibiotics

https://eropharmfast.shop/# cheapest ed online

Ero Pharm Fast: Ero Pharm Fast – erectile dysfunction online prescription

online prescription for ed: erectile dysfunction drugs online – Ero Pharm Fast

buy antibiotics over the counter Biot Pharm Over the counter antibiotics for infection

Licensed online pharmacy AU: PharmAu24 – Online medication store Australia

Pharm Au24: pharmacy online australia – Pharm Au 24

Buy medicine online Australia: online pharmacy australia – Buy medicine online Australia

http://eropharmfast.com/# top rated ed pills

get ed meds today: Ero Pharm Fast – order ed meds online

over the counter antibiotics: buy antibiotics online uk – buy antibiotics

Ero Pharm Fast buy ed meds online Ero Pharm Fast

online pharmacy australia: Pharm Au 24 – Pharm Au24

buy antibiotics for uti: BiotPharm – best online doctor for antibiotics

https://biotpharm.shop/# buy antibiotics for uti

online pharmacy australia: PharmAu24 – Discount pharmacy Australia

ed pills cheap edmeds or affordable ed medication

https://toolbarqueries.google.gp/url?q=https://eropharmfast.com buy ed medication

best online ed pills cheapest ed medication and online ed meds ed drugs online

https://biotpharm.com/# over the counter antibiotics

get antibiotics quickly: BiotPharm – buy antibiotics online

over the counter antibiotics buy antibiotics for uti or Over the counter antibiotics for infection

http://www.bayanay.info/forum-oxota/away.php?s=http://biotpharm.com Over the counter antibiotics for infection

buy antibiotics for uti Over the counter antibiotics for infection and buy antibiotics from india get antibiotics quickly

Ero Pharm Fast Ero Pharm Fast Ero Pharm Fast

get antibiotics without seeing a doctor: BiotPharm – buy antibiotics

get antibiotics without seeing a doctor: Biot Pharm – buy antibiotics online

http://eropharmfast.com/# buy ed pills online

Online medication store Australia: Online medication store Australia – Medications online Australia

cheapest erectile dysfunction pills ed prescription online Ero Pharm Fast

buy antibiotics online: buy antibiotics – cheapest antibiotics

discount ed pills discount ed pills or online ed pills

https://cse.google.com.ph/url?q=https://eropharmfast.com best online ed medication

online ed medications ed medicines and erectile dysfunction meds online ed treatments online

Ero Pharm Fast: Ero Pharm Fast – erectile dysfunction online prescription

PharmAu24 PharmAu24 or Online medication store Australia

https://images.google.ws/url?sa=t&url=https://pharmau24.shop Buy medicine online Australia

Pharm Au 24 Licensed online pharmacy AU and Pharm Au 24 Medications online Australia

buy antibiotics buy antibiotics online or Over the counter antibiotics pills

https://image.google.td/url?q=https://biotpharm.com buy antibiotics from canada

buy antibiotics online buy antibiotics over the counter and buy antibiotics online over the counter antibiotics

buy ed meds cheapest online ed treatment Ero Pharm Fast

online pharmacy australia: Pharm Au 24 – Online drugstore Australia

cheapest ed treatment low cost ed meds online or ed rx online

http://cse.google.com.jm/url?sa=t&url=http://eropharmfast.com edmeds

ed pills buy erectile dysfunction medication and online ed prescription cheap ed

over the counter antibiotics buy antibiotics online or buy antibiotics from india

https://images.google.ps/url?sa=t&url=https://biotpharm.com get antibiotics without seeing a doctor

buy antibiotics buy antibiotics and best online doctor for antibiotics best online doctor for antibiotics

buy ed meds online п»їed pills online or <a href=" https://pharmacycode.com/catalog-_hydroxymethylglutaryl-coa_reductase_inhibitors.html?a=buy erectile dysfunction medication

https://maps.google.com.ni/url?q=https://eropharmfast.com pills for ed online

=to+buy+viagra]erectile dysfunction online prescription ed meds by mail and cheap ed medication cheap ed